One persistent confusion about estimands is the notion that, by stating a target population, we are somehow assuming that studies (trials in particular) involve random sampling from that population.

This is set against a narrative, reasonably prominent among trialists, that studies being ‘not-representative’ is a good thing. The assertion prompts two immediate questions:

Not-representative of what?

Why is that a good thing?

A few thoughts on these questions and what I see as the confusion.

Non-representative of what?

I’ll talk about trials here. I can’t tell if this is a cantankerous, intentional misunderstanding, but when we talk about the target population, people sometimes interpret it as meaning ‘the general population’. No-one with so little understanding should be discussing this stuff but here we are.

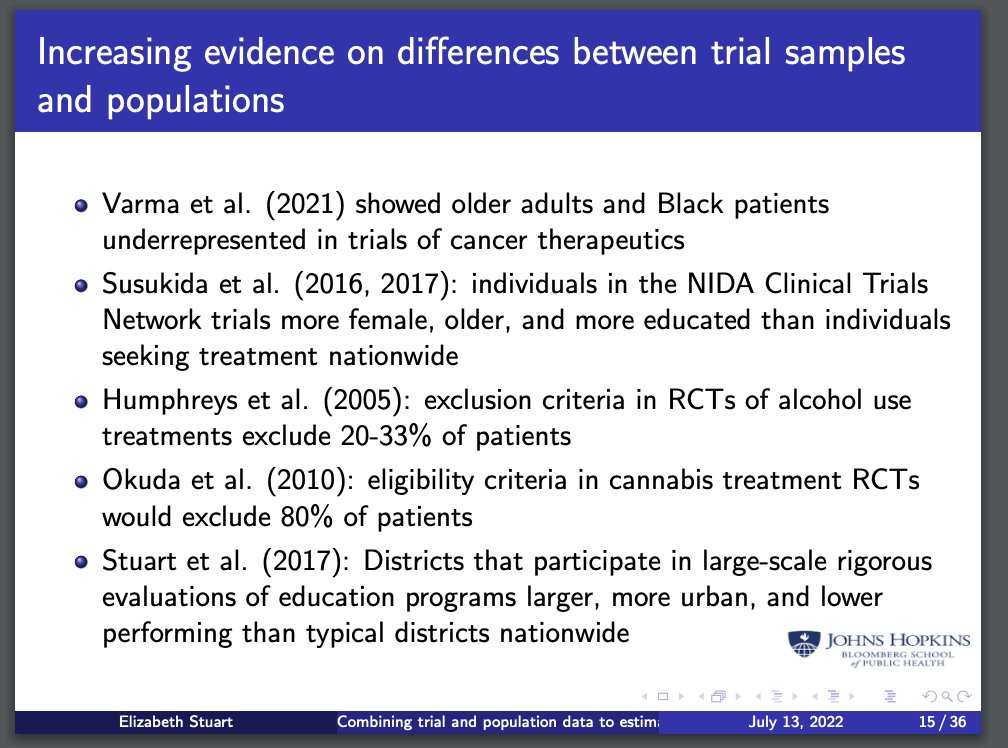

When we say target population, we just mean ‘the group of individuals who would be offered (and want to undergo) an intervention’. Most trials I’ve seen have inclusion and exclusion criteria that reflect this and recruit anyone willing. Those who would not be willing to participate are essentially not in the target population (unless they would be willing to take the treatment only once the trial is done; see the footnote for an example¹). While this is my experience, Liz Stuart brought me down to earth with some counterexamples. Looks like I live in a bubble!

Is non-representativeness actually ‘good’?

The paper that gets wheeled out to argue for non-representativeness is by Rothman, Gallacher and Hatch². Lots of people I respect like it, so I wonder if they actually read it. See the footnote if you want to know why I hated the opening quote³. Years ago I made that comment on Twitter and Rothman gently encouraged me to read the whole paper. He thought I would find it instructive.

I did not. I initially drafted a point-by-point critique, then decided that wasn’t what I wanted to do with this post, so instead I’ll give my main gripe. The only substantive argument in the paper that non-representativeness is a good thing is an example offering three options for a study:

a) enrol in a narrow age group only;

b) enrol equal numbers from each of three age groups;

c) enrol the distribution of age seen in the target population.

Here’s a picture.

They describe one advantage of (b) over (c), which (paraphrasing) amounts to more power to detect treatment-effect heterogeneity across age groups. There is another important point: if the effect does vary with age, which is the very thing (b) is designed to detect, then (b) sacrifices the validity of the average causal effect. That effect should be an average over the age distribution in the target population, and option (b) means the age distribution is mismatched between the study and target populations. This is bizarre. Elsewhere they play off ‘statistical inference’ against ‘scientific inference’, which is odd, but if that’s your view, why base the only substantive argument on statistical power?
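
To make the arithmetic behind that mismatch concrete, here is a minimal sketch. Every number in it is made up for illustration (the stratum-specific effects, the target age distribution and the names `stratum_effect`, `equal_enrolment` and `target_distribution` are all assumptions, not values from the paper). It simply averages the same age-specific effects over the two enrolment distributions.

```python
# Hypothetical numbers, purely for illustration: none come from the paper.

# Assumed average treatment effect within each age group (e.g. risk differences).
stratum_effect = {"young": -0.02, "middle": -0.05, "old": -0.11}

# Option (b): equal enrolment from each of three age groups.
equal_enrolment = {"young": 1 / 3, "middle": 1 / 3, "old": 1 / 3}

# Option (c): enrolment mirroring an assumed target-population age distribution.
target_distribution = {"young": 0.5, "middle": 0.3, "old": 0.2}


def average_effect(effects, weights):
    """Weighted average of stratum-specific effects; weights must sum to 1."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return sum(effects[group] * weights[group] for group in effects)


ace_equal = average_effect(stratum_effect, equal_enrolment)
ace_target = average_effect(stratum_effect, target_distribution)

print(f"Average effect under equal enrolment (b): {ace_equal:.3f}")
print(f"Average effect in the target population (c): {ace_target:.3f}")
```

With these invented numbers the two averages differ (about -0.060 versus -0.047). If the three age-specific effects were identical, the two would coincide, which is exactly the situation in which there is no heterogeneity for option (b) to detect.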

Representative treatment effects from non-representative samples?

One argument made by some trialists is that study populations need not be representative; it’s treatment effects that need to be. This point is obviously right but again is not an argument against representativeness. It also makes you wonder: if you know you’re studying a non-representative population, how can you know you’re getting a representative treatment effect?

The early chapters of Hernán and Robins’ book were really good for thinking about this⁴. Rather than beginning by discussing intuitive ideas like confounding, they unapologetically begin with an example involving Greek gods’ counterfactuals under alternative actions. You can see their individual causal effects, which are not all the same (some are positive, some are negative, some zero), and compute the average. Now, from this group it is absolutely possible to have a non-representative sample that has a representative average causal effect, but that seems an odd thing to leave hostage to fortune.
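
A toy version of that idea, as a sketch only: the eight individuals and their potential outcomes below are invented (they are not the values from the book's table), and the sample names are hypothetical. One non-representative sample happens to reproduce the population average causal effect; another does not.

```python
from statistics import mean

# Invented potential outcomes (outcome if untreated, outcome if treated) for
# eight made-up individuals. These are NOT the values from Hernán and Robins.
potential_outcomes = {
    "A": (0, 1),
    "B": (0, 1),
    "C": (0, 1),
    "D": (0, 0),
    "E": (1, 1),
    "F": (0, 0),
    "G": (1, 1),
    "H": (1, 0),
}

# Individual causal effect: outcome if treated minus outcome if untreated.
individual_effect = {
    name: y_treated - y_untreated
    for name, (y_untreated, y_treated) in potential_outcomes.items()
}


def average_causal_effect(names):
    """Mean of the individual causal effects over the given individuals."""
    return mean(individual_effect[name] for name in names)


population_ace = average_causal_effect(potential_outcomes)

# Neither sample mirrors the population mix of positive, zero and negative effects.
lucky_sample = ["A", "D", "E", "F"]    # one positive effect, three zero effects
unlucky_sample = ["A", "B", "C", "D"]  # three positive effects, one zero effect

print("Population:", population_ace)
print("Lucky sample:", average_causal_effect(lucky_sample))
print("Unlucky sample:", average_causal_effect(unlucky_sample))
```

Here the population average is 0.25, the lucky sample also gives 0.25 and the unlucky one gives 0.75. The catch is that individual effects are never observed in a real study, so nothing in the data tells you which kind of sample you have.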

There has been some cool work over the last few years about transporting treatment effects beyond the study population, but ideally we wouldn’t need to do this.

Random sampling?

Trials never randomly sample from a target population (prove me wrong, kids)⁵. For one thing, recruitment is hard, and we tend to take everyone eligible we can get. In most trials, the inclusion and exclusion criteria correspond to those who would be offered future treatment if it looks good. This does not invoke random sampling; it just says that we don’t intentionally exclude groups who would be offered treatment in future (or try to control the distribution of some covariate as in the Rothman, Gallacher and Hatch paper).

There are of course some exceptions. One is that study centres are typically chosen for being suitably professional: enough that we can reasonably expect them to follow the protocol and record reliable data. This is a pragmatic choice for the integrity of the trial. If the centres we choose to exclude are systematically different to those we include, this might induce a mismatch between the population implied by the trial sample and the target population.

1. As an example: I have coeliac disease. At the moment there is a company (I think) trying to recruit coeliacs to a study of some intervention that means coeliacs can eat gluten without experiencing the immune reaction. This would be life-changing! I and the two other coeliacs I know have been approached and we’d all be delighted to take this thing if it works, but are unwilling to be involved in a study. Lots of coeliacs are far less extreme than us and we’re quite happy for them to participate in this study.

2. Rothman KJ, Gallacher JEJ, Hatch EE (2013). Why representativeness should be avoided. International Journal of Epidemiology 42(4):1012–14. https://doi.org/10.1093/ije/dys223

3. It begins with this quote:

The essence of knowledge is generalisation. That rubbing wood in a certain way can produce fire is a knowledge derived by generalisation from individual experiences; the statement means that rubbing wood in this way will always produce fire. The art of discovery is therefore the art of correct generalisation. What is irrelevant, such as the particular shape or size of the piece of wood used, is to be excluded from the generalisation; what is relevant, for example, the dryness of the wood, is to be included in it. The meaning of the term relevant can thus be defined: that is relevant which must be mentioned for the generalisation to be valid. The separation of relevant from irrelevant factors is the beginning of knowledge.

– Hans Reichenbach (Reichenbach, H. The Rise of Scientific Philosophy, 1951, vol. 5, Bognor Regis, UK, University of California Press, p. 5)

I have a side hustle carving wooden spoons, my big sister is a farmer and my little brother is a carpenter, so I have an obsession with trees and wood. I can claim to know a fair bit more about it than ol’ Reichenbach. My gripe is that the things he lists as irrelevant are actually relevant. It’s not just about having dry wood. Matches are long and thin, not spherical. They are almost always made from aspen or poplar due to their low burn temperatures, which means they ignite easily (try switching that for beech). Similarly, if you tried to make fire using friction on alder, you might be there for a while; dry wood is not sufficient. So the ‘generalisations’ he lists wouldn’t produce fire, which makes them a good cautionary tale! He’s probably too dead to defend himself.

4. Hernán MA, Robins JM (2020). Causal Inference: What If. Boca Raton: Chapman & Hall/CRC.

5. OK, individually-randomised trials. Some cluster-randomised trials do randomly sample within clusters, because adding more patients within a cluster sometimes adds very little statistical information.